Image sensors convert light into electrons to generate images in digital cameras. Learn how they work and what acronyms such as CMOS, APS-C and ToF mean.

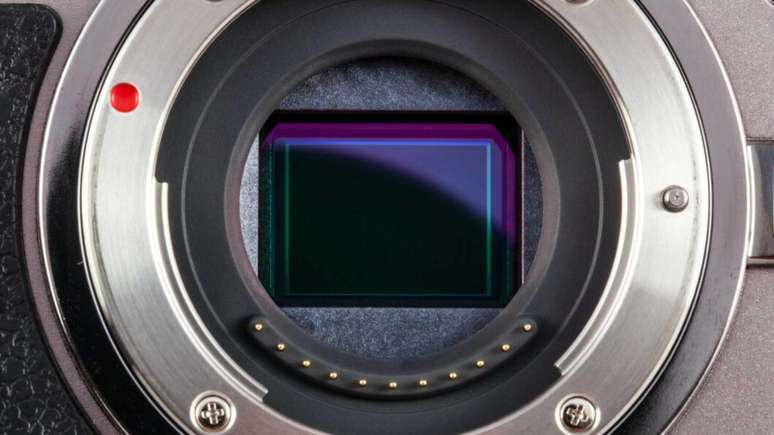

The image sensor of a camera is a device that converts light into a digital image. Its function is to capture the light that comes through the lens, which releases electrons that are then amplified and transformed into a photo.

Image sensors found in digital cameras vary in characteristics such as size, type, resolution, sensitivity, dynamic range, and pixel size. Below, see how a sensor works and what the main specifications related to the component mean.

What does the image sensor in a digital camera do?

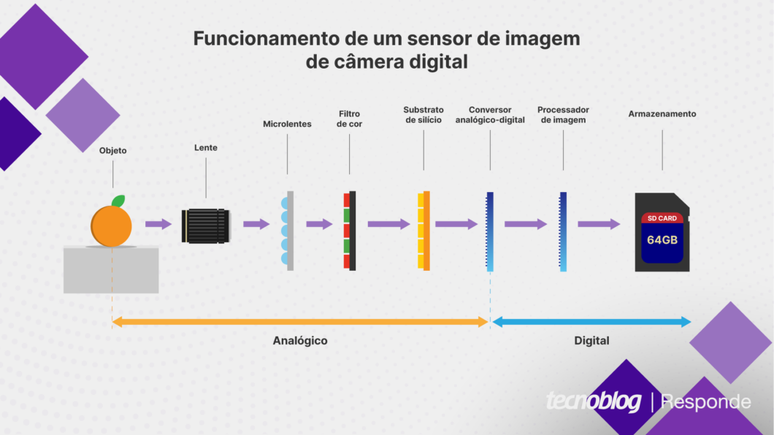

Camera sensors transform light into an image using the photoelectric effect: light passes through the lens and strikes a layer of silicon, which generates an electrical signal. This signal passes through the analog-to-digital converter and is transformed into a photo by the image processor.

A typical digital camera sensor has three layers: the substrate, a color filter, and microlenses.

The substrate measures the intensity of light: it has small cavities (pixels) that hold incoming light and allow it to be measured. The substrate itself is monochromatic: it can only measure the amount of light it collects, not determine its color.

The color filter sits on top of the substrate and allows only a certain color to enter each pixel. The Bayer filter is the most common type, with alternating rows of red-green and green-blue filters.

The microlenses sit over the color filter and direct the light onto a single pixel, preventing it from falling into the space between two pixels (where it wouldn’t be measured).
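The way a color filter restricts each pixel to one color can be sketched in a few lines of Python. This is only an illustration: the function name `bayer_mosaic`, the RGGB starting corner, and the test scene are assumptions made for the example, not details of any particular sensor.

```python
def bayer_mosaic(rgb):
    """Keep one color sample per pixel, following an RGGB Bayer layout.

    rgb is a list of rows; each row is a list of (r, g, b) tuples.
    Even rows alternate red-green, odd rows alternate green-blue.
    """
    mosaic = []
    for y, row in enumerate(rgb):
        out_row = []
        for x, (r, g, b) in enumerate(row):
            if y % 2 == 0:
                out_row.append(r if x % 2 == 0 else g)
            else:
                out_row.append(g if x % 2 == 0 else b)
        mosaic.append(out_row)
    return mosaic


# Hypothetical scene: a uniform orange surface (R=200, G=100, B=0).
scene = [[(200, 100, 0)] * 4 for _ in range(4)]
raw = bayer_mosaic(scene)
for row in raw:
    print(row)
```

Each pixel ends up with a single number, so the full color image has to be reconstructed later by the image processor from neighboring pixels.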

CCD and CMOS, the most common types of sensors

CMOS is the most common sensor type in consumer cameras, including cell phones, mirrorless cameras, and DSLRs. CCD, although still present in some consumer electronics, is now used mainly in professional applications.

In summary:

- CMOS (complementary metal-oxide-semiconductor): converts light into an electrical signal that is amplified inside each pixel and then turned into the digital signal that represents the image. This type of sensor is smaller and cheaper, but photos are more prone to noise;

- CCD (charge-coupled device): collects light and converts it into an electrical charge, which is transferred out of the pixels, amplified, and sent to an analog-to-digital converter. Image quality has traditionally been higher, as has the cost of production.

Sensor sizes on cameras: full frame, APS-C, micro four thirds

Camera image sensors usually come in standardized sizes, given in fractions of an inch or denoted by acronyms such as APS-C and M4/3. The most common sizes are:

- full frame: measures 36 × 24 mm, the same frame size as 35 mm still-photography film. Allows for a shallower depth of field in photos. It serves as the reference for the size of other sensors, expressed through the crop factor;

- APS-H (Advanced Photo System type H): measures 27.9 × 18.6 mm, with a crop factor of 1.3x, meaning its diagonal is about 1.3 times shorter than that of a full-frame sensor. This size was used on some cameras in the Canon EOS-1D line;

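- APS-C (Advanced Photo System type C): named after a 25.1 × 16.7 mm film frame. Digital APS-C sensors are slightly smaller: about 23.5 × 15.6 mm (crop factor of 1.5x) on Nikon and Sony cameras, and about 22.3 × 14.9 mm (crop factor of 1.6x) on Canon cameras. It has a 3:2 aspect ratio and is used in DSLR and mirrorless cameras from various brands;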

- Micro Four Thirds (MFT or M4/3): measures 17.3 × 13 mm, with a crop factor of approximately 2. It has a 4:3 aspect ratio and was created by Olympus and Panasonic in 2008 for mirrorless interchangeable-lens cameras;

- 1 inch: measures 13.2 × 8.8 mm, with a crop factor of 2.7x. It is used in compact cameras, such as the Sony RX100 and RX10, and has appeared in mobile phones such as the Xiaomi 13 Ultra, Oppo Find X6 Pro and Vivo X90 Pro;

- 1/1.3 inch: measures approximately 9.8 × 7.3 mm, with a crop factor of 3.6x. It is used in smartphones such as the iPhone 14 Pro Max, Samsung Galaxy S23 Ultra and Xiaomi 12 Pro;

- 1/2.3 inch: measures 6.17 × 4.55 mm, with a crop factor of 5.6x. This format has been used in all generations of GoPro up to the Hero10 Black, several DJI Mavic drone models, and several Sony Xperia phones;

- 1/2.55 inch: measures 5.6×4.2mm, with a crop factor of 6.4x. It was present in the main rear camera of the Galaxy S7 to S10, the Pocophone F1 and the iPhone XR, XS and XS Max;

- 1/3 inch: measures 4.80 × 3.60 mm, with a crop factor of about 7x. It was used by Apple from the iPhone 5S to the iPhone X;

- medium format: covers sensor sizes between full frame (36 × 24 mm) and large format (130 × 100 mm). It is used in Hasselblad, Fujifilm and Pentax cameras.
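The crop factors in the list above can be checked from the sensor dimensions, since the crop factor is the ratio between the full-frame diagonal and the diagonal of the sensor in question. The sketch below is a minimal illustration; the function names are made up for the example and the dimensions are the ones quoted in the list.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # reference sensor: width, height in mm


def diagonal_mm(width_mm, height_mm):
    """Length of the sensor diagonal in millimetres."""
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm, height_mm):
    """Full-frame diagonal divided by the diagonal of the given sensor."""
    return diagonal_mm(*FULL_FRAME_MM) / diagonal_mm(width_mm, height_mm)


sensors = {
    "APS-H": (27.9, 18.6),
    "1 inch": (13.2, 8.8),
    "1/2.3 inch": (6.17, 4.55),
}
for name, (w, h) in sensors.items():
    print(f"{name}: {crop_factor(w, h):.1f}x")
```

Published crop factors are usually rounded, so small differences between the computed value and a manufacturer's figure are expected.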

ISO, resolution, dynamic range and other specifications

There are several specifications related to an image sensor. In addition to type (CCD or CMOS) and size (full frame, APS-C, micro four thirds, etc.), the following terms are common:

- ISO: indicates the sensor's sensitivity to light. At ISO 1600, for example, the shutter has to stay open twice as long as at ISO 3200 to produce an equally bright photo, with the same aperture;

- resolution: how many pixels a camera can capture, usually measured in megapixels;

- pixel size: the size of the photosite, i.e. the area of the pixel that captures light. It is measured in micrometres (µm). In general, the larger the pixel, the higher the image quality;

- crop factor: ratio of the diagonal of a full-frame sensor (43.3 mm) to that of a given sensor. For example, the diagonal of a full-frame sensor is twice the diagonal of an M4/3 sensor, so the crop factor of M4/3 is 2;

- frame rate (fps): the number of frames the camera records per second of video. The most common values are 24, 25, 29.97, 30, 50 and 60 frames per second;

- burst rate: how many photos per second the camera can capture in burst mode. iPhones have a burst mode of 10 frames per second (fps). DSLR and mirrorless cameras have burst rates ranging from 3 to 30 fps;

- dynamic range: the difference between the darkest and lightest tones that a camera can capture without losing detail. It is measured in “stops”: each additional stop is equivalent to doubling the brightness. The human eye sees up to 20 stops, according to Sony;

- HDR: technique that combines several shots of the same scene with different exposure values, or stops. In this way it is possible to reveal the details in the darkest areas, and preserve the nuances in the brightest parts;

- depth of field (DOF): the range of distances in the scene that appears in focus. With a deep depth of field, nearly everything in the frame looks sharp; with a shallow depth of field, only part of the image is sharp and the rest is blurred;

- sensor bit depth: number of bits used to record the value of each pixel, which determines how many tones can be distinguished. With 8 bits, 256 different tones can be recorded; with 12 bits, this increases to 4,096 tones.
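Several of the specifications above come down to powers of two. The sketch below works through three of them: the ISO and shutter time trade-off, the contrast ratio covered by a number of stops, and the number of tones for a given bit depth. The function names are illustrative, and the ISO calculation assumes the aperture and the scene lighting stay the same.

```python
def equivalent_shutter_time(time_s, iso_from, iso_to):
    """Shutter time that keeps the same brightness after an ISO change.

    Assumes aperture and lighting are unchanged: halving the ISO
    requires doubling the time the shutter stays open.
    """
    return time_s * (iso_from / iso_to)


def stops_to_contrast_ratio(stops):
    """Each stop doubles the brightness, so n stops span a ratio of 2**n."""
    return 2 ** stops


def tonal_levels(bits):
    """Number of distinct values a pixel can take at a given bit depth."""
    return 2 ** bits


# 1/100 s at ISO 3200 becomes 1/50 s at ISO 1600.
print(equivalent_shutter_time(1 / 100, iso_from=3200, iso_to=1600))
print(stops_to_contrast_ratio(20))
print(tonal_levels(8), tonal_levels(12))
```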

These terms can also appear when referring to cameras:

- rolling shutter: effect that makes fast-moving objects appear tilted when captured by a CMOS sensor, because the sensor reads the image line by line rather than all at once (as CCD sensors do);

- moiré effect: optical distortion in scenes with fine repeating details, such as striped clothing or vertical lines on buildings, whose pattern interferes with the grid of the sensor’s color filter;

- low-pass filter: reduces moiré, false color and aliasing in photos by blocking high spatial frequencies, i.e. the finest detail. It is located in front of the sensor.
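The tilt caused by line-by-line readout can be reproduced with a toy model. In the sketch below, a one-pixel-wide vertical bar moves to the right while the frame is being read; the function name, the text rendering, and the speed (one pixel per row readout time) are assumptions chosen to keep the example small.

```python
def capture(rows, cols, bar_start, speed_px_per_row_time, rolling=True):
    """Render a vertical bar that moves right while the frame is read.

    rolling=True: each row is sampled one row-time after the previous one.
    rolling=False: every row is sampled at the same instant (t = 0).
    """
    frame = []
    for y in range(rows):
        t = y if rolling else 0
        x = (bar_start + speed_px_per_row_time * t) % cols
        frame.append("".join("#" if c == x else "." for c in range(cols)))
    return frame


print("line-by-line readout:")
for line in capture(4, 8, bar_start=1, speed_px_per_row_time=1):
    print(line)

print("all rows at once:")
for line in capture(4, 8, bar_start=1, speed_px_per_row_time=1, rolling=False):
    print(line)
```

In the first frame the bar is drawn one pixel further right on every row, so it looks slanted; in the second it stays vertical.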

Depth sensors, LiDAR and ToF

Depth sensors measure the distance between an object and the camera. They are used for augmented reality, facial biometrics, autofocus in cameras and bokeh effect in photos.

ToF, LiDAR, and other depth sensors don’t capture photos by themselves, but they can be considered image sensors because they also rely on the photoelectric effect to generate depth maps, which show the distance to each object in the scene.
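The article does not go into how the distance is obtained. As a rough illustration, a direct time-of-flight sensor emits a light pulse and times how long it takes to return; the distance is half the round trip multiplied by the speed of light. The function names and the sample timings below are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second, in vacuum


def tof_distance_m(round_trip_s):
    """Distance to the object: the pulse travels there and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def depth_map(round_trip_times):
    """Convert a grid of round-trip times (seconds) into distances (metres)."""
    return [[tof_distance_m(t) for t in row] for row in round_trip_times]


# Hypothetical 2 x 2 measurement, in seconds (10 ns and 20 ns round trips).
times = [[10e-9, 10e-9], [20e-9, 20e-9]]
for row in depth_map(times):
    print([round(d, 2) for d in row])
```

A round trip of 10 nanoseconds corresponds to about 1.5 m, which shows how precise the timing has to be.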

How do you clean a camera’s image sensor?

Move to a location free from dust and wind, remove the lens, and use a handheld air blower without touching the sensor. Do not use compressed air. If the dust doesn’t come off, purchase a camera cleaning solution, put two drops on a cotton swab, and gently wipe the sensor.

How does automatic camera sensor cleaning work?

Many DSLR and mirrorless cameras have a vibration mechanism that shakes dust loose so that it falls into a collector below the sensor. They can apply ultrasonic vibration to the filter in front of the sensor or shake the sensor itself.

Does a larger sensor mean better image quality?

Generally yes, because a larger sensor usually has larger pixels, which can collect more light.
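The link between sensor size and pixel size can be estimated with simple arithmetic. The sketch below assumes square pixels that cover the whole sensor with no gaps, which real sensors only approximate; the function name and the 12-megapixel resolution are chosen for illustration.

```python
import math


def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate pixel side length in micrometres.

    Assumes square pixels that tile the full sensor area.
    """
    area_um2 = (width_mm * 1000) * (height_mm * 1000)
    return math.sqrt(area_um2 / (megapixels * 1_000_000))


# Same resolution, two sensor sizes.
print(f"full frame, 12 MP: {pixel_pitch_um(36, 24, 12):.2f} µm")
print(f"1/2.3 inch, 12 MP: {pixel_pitch_um(6.17, 4.55, 12):.2f} µm")
```

At the same resolution, the full-frame pixel is several times wider than the 1/2.3-inch pixel, so it has a much larger area for collecting light.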

How can you find out the sensor size of a cell phone camera?

Use the free Device Info HW app on Android: install it, open it, and tap “Camera” to see the size of the camera sensors. In the case of the iPhone, look up the device name in databases such as GSMArena.

How the digital camera image sensor works

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.