Google has announced new features for Android accessibility apps, such as Lookout and Look to Speak

On Global Accessibility Awareness Day, celebrated this Thursday (16), Google announced a series of accessibility features for Android and Google Maps. Among them, the Lookout app can now find objects, signs and more using the phone's camera.

Finding objects

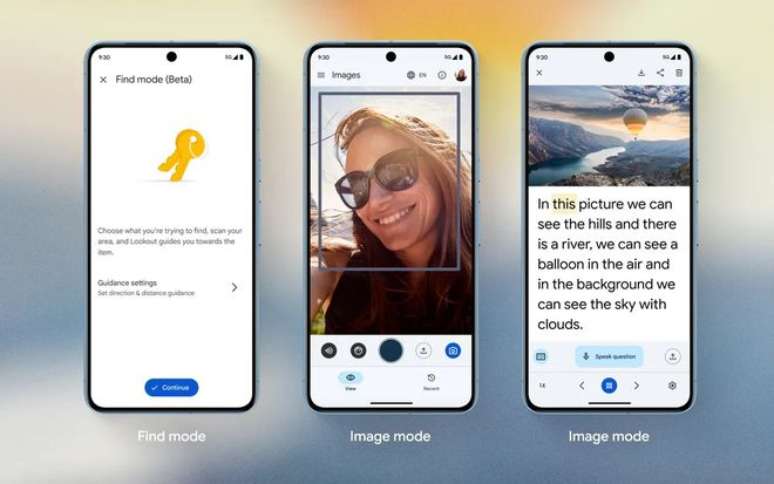

The Lookout app is designed for people who are blind or have low vision; it uses the camera to identify items and read text aloud. The tool now has a feature called "Find Mode", which lets you locate specific objects, such as bathrooms, seats and tables, by moving your phone around a room.

The app recognizes the object and reports its direction and distance. Google also confirmed that Lookout will use artificial intelligence to automatically generate descriptions of photos taken directly in the app; this feature will initially be available only in English.

Text-free mode in Look to Speak

The Look to Speak app, which lets you speak preset phrases aloud using only your eyes, has gained a text-free mode that users can personalize. In this mode, you choose emojis, photos and other icons to trigger each phrase.

More accessible maps

Google Maps is getting a series of new accessibility features. First, information about wheelchair-accessible places has been extended to the desktop version; previously it was available only on Android and iOS.

Maps' integration with Google Lens has also been improved for screen readers and voice guidance, aimed at people who are blind or have low vision. The mobile app can now read aloud the category of nearby places and how far away they are.

Additionally, businesses and other venues that support the Auracast protocol can list this attribute on their Maps profile. Auracast transmits audio over Bluetooth and works with several hearing aid models, so visitors can hear audio at a location without needing an extra device.

Project GameFace

Project GameFace is a developer platform that lets you control Android with facial gestures. The computer version launched last year, and the mobile version supports up to 52 different gestures, which can be customized to each user's needs.

More news

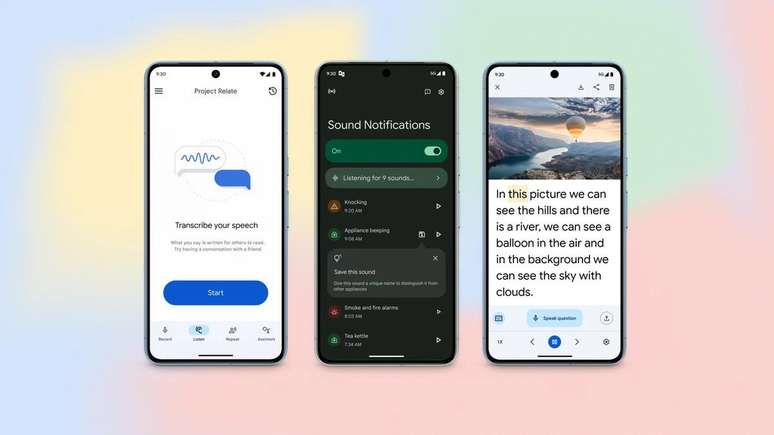

Finally, the Mountain View giant revealed changes to two other platforms. The first is Project Relate, which lets users build a personalized speech recognition model: the tool has gained more customization options and can import phrases from other apps, such as Google Docs.

Sound Notifications, which identifies sounds in the user's surroundings, has a new design that makes detected sounds easier to find. Accessibility was a major focus of the Android 14 launch, and the same is expected for Android 15, which also received a new beta version.

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.