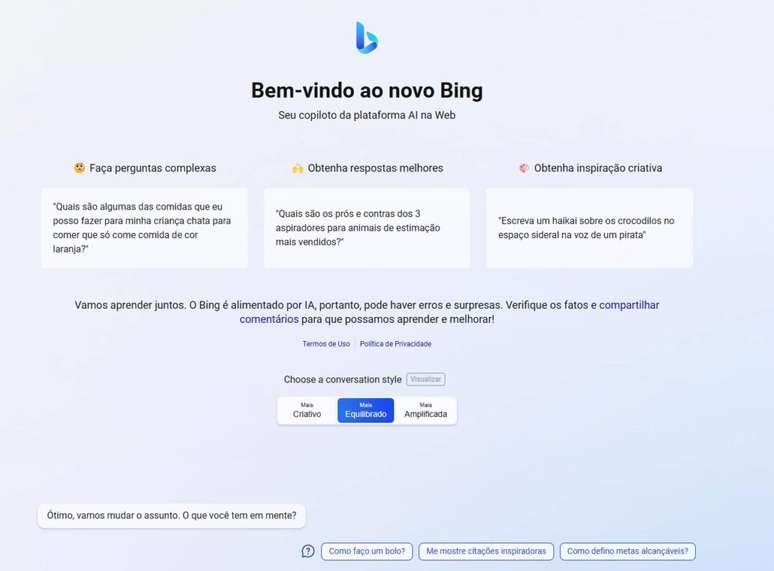

The AI gets three modes that let the user choose between creative and precise answers, or something in between

Bing Chat arrived promising to help users find information and create text. In some cases, however, the artificial intelligence began to behave strangely and give rude answers. To address this, Microsoft will offer personality options for the chatbot: amplified, creative and balanced.

Amplified mode is called Precise in English, which, in my humble opinion, would have been a better translation. In this mode, Bing Chat will try to find relevant, factual information, and its answers will also be shorter.

In creative mode, the answers will be more original and imaginative. Microsoft promises surprises and entertainment here.

Balanced mode, as you can imagine, offers a little of each. It is enabled by default.

Restrictions have left Bing Chat ‘shy’

Microsoft placed restrictions on Bing Chat after the AI was rude and even manipulative to some users.

The problem is that the chatbot then started refusing to fulfill even simple requests. It did not seem sure of itself.

Many of these restrictions have been relaxed in recent weeks so that the chatbot can answer questions again.

The new mode selection should help with this: users who feel uncomfortable can switch to precise mode and get a less talkative chatbot, while those who need ideas can switch to creative mode.

Artificial intelligence will have fewer hallucinations, promises Microsoft

In addition, the new Bing Chat update should reduce the number of hallucinations in its responses, according to Mikhail Parakhin, head of web services at Microsoft.

Hallucination is technical jargon for when the language model produces responses with inaccurate or completely fabricated data.

In one such case, Bing simply got the current year wrong and, after contradicting itself, refused to correct the mistake. The AI insisted that it was the user who was wrong.

Creative, perhaps? But creativity was not what was needed there.

With information from The Verge.

Microsoft gives Bing Chat's personality the “freedom” to be more creative

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.