NVIDIA Launches NIM, an Inference Microservices Suite with Pre-Trained, CUDA-Optimized Partner Models to Accelerate AI Deployment at Scale

At GTC 2024, NVIDIA presented NVIDIA Inference Microservices (NIM), a suite of pre-trained inference models meant to accelerate AI deployment at scale. In a Q&A session, NVIDIA CEO Jensen Huang said that artificial intelligence lies not in the hardware but in the software; the hardware is designed only to enable the ideal applications.

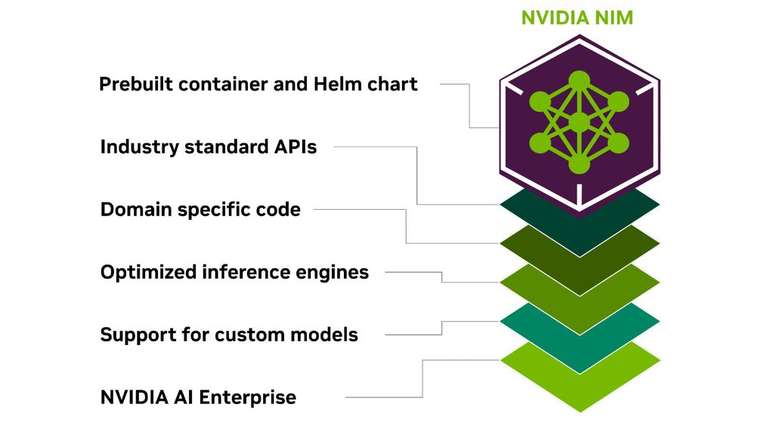

NIM is part of NVIDIA’s full-stack approach to providing the complete ecosystem that businesses of all sizes need, not only to deploy standard AI but also to build their own, tailored to their needs. Rather than committing customers to the APIs of a specific development platform, NIM lets them experiment with a range of open platforms, all optimized for CUDA through direct partnerships between the developers and NVIDIA, and download the model that best suits their needs.

Freedom of choice and ease of migration

NVIDIA inference microservices include models for a variety of industries, from healthcare and image analytics to AI virtual assistants and game development. In practice, the pre-trained, reduced models are all available in an open format, so companies can experiment with them both in cloud environments and on their internal systems.

Because they are all already optimized for CUDA, technically any NVIDIA infrastructure can run them, which at least lets a company work out which of these models best suits its needs. Moreover, since they are all included in the NVIDIA AI Enterprise package, NVIDIA itself is responsible for sizing the infrastructure needed to run these services and for directing the customer to the manufacturing partner with the most appropriate solutions.

Another benefit is that the native NVIDIA hardware support of the products included in NIM makes it easy to change models if the initial one isn’t working as desired. The customer simply downloads the new model and redeploys it internally, using virtually the same processes as for the previous one.

Besides keeping AI operating costs in check as deployments scale, since customers avoid investing more resources than strictly necessary, this approach reduces implementation times from months to weeks, according to NVIDIA.

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.