In an interview with the BBC, a young English woman says she discovered that the person responsible for using her image was one of her best friends.

“Jodie” found photos of herself used in deepfake pornography on the internet, and then discovered something even more shocking. In an interview on the BBC’s File on 4 radio programme, she spoke about the moment she realized that one of her best friends was responsible.

WARNING: Contains offensive language and descriptions of sexual violence.

In spring 2021, Jodie (not her real name) received a link to a pornography website from an anonymous email account.

When she clicked through, she came across explicit images and a video of what appeared to be her having sex with several men. Jodie’s face had been digitally added to another woman’s body, in what is known as a “deepfake”.

Someone had posted photos of Jodie’s face on a porn site, saying it made him “very horny” and asking if other users of the site could make “fake porn” of her. In exchange for the deepfakes, the user offered to share more photos of Jodie and details about her.

Speaking about the experience for the first time, Jodie, now in her early twenties, recalls:

“I screamed, I cried, I scrolled violently through my phone to understand what I was reading and what I was seeing.”

“I knew it could really ruin my life,” she adds.

Jodie forced herself to browse the porn site and says she felt “her whole world falling apart”.

She had come across a specific image and noticed something terrible.

A series of shocking events

It wasn’t the first time Jodie had been targeted.

In fact, it was the culmination of years of anonymous online abuse.

When Jodie was a teenager, she discovered her name and photos were being used on dating apps without her consent.

This went on for years and in 2019 she even received a Facebook message from a stranger saying he would meet her at Liverpool Street station in London for a date.

She told the stranger that it wasn’t her he had been talking to. She remembers feeling “nervous” because he knew everything about her and had managed to find her online. He had looked her up on Facebook after the “Jodie” on the dating app stopped responding.

In May 2020, during the lockdown imposed by the Covid-19 pandemic in the UK, Jodie was also alerted by a friend to a number of Twitter accounts posting photos of her, with captions suggesting she was a prostitute.

“What would you like to do with little Jodie?” reads the caption alongside the image of Jodie in a bikini, taken from her private social media account.

The Twitter accounts responsible for posting these images had names like “slut exposer” and “pervert boss.”

Jodie had shared all of the images used on her social media with close friends and family, and with no one else.

She later discovered that these accounts were also posting images of other women she knew from university, as well as from her hometown of Cambridge.

“At that moment, I had a strong feeling that I was at the center of the situation and that this person was trying to harm me,” she says.

Counterattack

Jodie began contacting the other women in the photos to warn them, including a close friend we’ll call Daisy.

“I just felt bad,” Daisy says.

Together, the friends discovered several other Twitter accounts posting photos of them.

“The more we looked, the worse it got,” Daisy says.

She messaged the Twitter users, asking where they had got the photos. The response was that the photos were “uploads” from anonymous senders who wanted them shared.

“It’s an ex-boyfriend or someone who is attracted to you,” one user responded.

Daisy and Jodie created a list of all the men who followed them both on social media and who had access to both of their photo albums.

The friends concluded that it must be Jodie’s ex-boyfriend. She confronted him and blocked him.

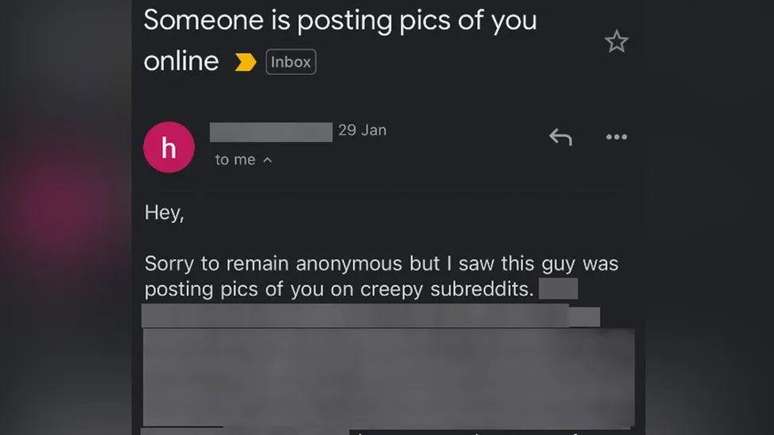

For a few months the posts stopped, until someone got in touch via an anonymous email.

“Sorry to remain anonymous,” the email said, “but I saw this guy was posting photos of you on creepy subreddits. I know this must be really scary.”

Jodie clicked on the link and was taken to the social network Reddit, where a user had posted photos of Jodie and two of her friends, numbering them: 1, 2 and 3.

Other people online were asked to play a game: which of these women would you have sex with, marry or kill.

Under the post, 55 people had already commented.

The photos used on the site were recent and had been published after Jodie blocked her ex-boyfriend. The friends then realized they had blamed the wrong person.

Six weeks later, the same anonymous person got in touch again via email, this time to alert her to the deepfakes.

“The Ultimate Betrayal”

When compiling the list, Jodie and Daisy left off some men they completely trusted, such as family members and Jodie’s best friend, Alex Woolf.

Jodie and Alex formed a strong friendship when they were teenagers – it was their shared love of classical music that brought the two together.

Jodie sought comfort from Woolf when she discovered her name and photos were being used on dating apps without her consent.

Woolf graduated with a degree in music from Cambridge University and won the BBC Young Composer of the Year award in 2012, as well as appearing on the UK program Mastermind in 2021.

“He [Woolf] was very aware of the problems women face, especially on the internet,” says Jodie.

“I really thought he was a supporter.”

However, when she saw the deepfake pornographic images, there was a profile photo of her with King’s College, University of Cambridge, in the background.

She clearly remembered when it was taken and that Woolf was also in the photo. He was also the only person she had shared the image with.

It was Woolf who had offered to share more original photos of Jodie in exchange for their being turned into deepfakes.

“He knew the profound impact he was having on my life,” Jodie says. “And yet he did it.”

“Totally ashamed”

In August 2021, 26-year-old Woolf was convicted of taking images of 15 women, including Jodie, from social media and uploading them to pornography websites.

He was given a 20-week prison sentence, suspended for two years, and ordered to pay each of his victims £100 compensation.

Woolf told the BBC that he is “totally ashamed” of the behavior that led to his conviction – and that he is “deeply sorry” for his actions.

“I think about the suffering I have caused every day and I have no doubt that I will continue to do so for the rest of my life,” he said.

“There is no excuse for what I did, nor can I adequately explain why I acted so despicably on those impulses at the time.”

Woolf denies having anything to do with the harassment Jodie suffered before the events for which he was convicted.

For Jodie, finding out what her friend had done was “the ultimate betrayal and humiliation”.

“I replayed every conversation we had, when he had comforted me, supported me and been kind to me. They were all lies,” she says.

We contacted X (formerly Twitter) and Reddit about the posts. X did not respond, but a Reddit spokesperson said: “Non-consensual intimate media (NCIM) has no place on the Reddit platform. The subreddit in question has been banned.” The porn site was also taken offline.

In October 2023, sharing deepfake pornographic material became a criminal offense as part of the Online Safety Act in the UK.

There are tens of thousands of deepfake videos online. A recent survey found that 98% are pornographic.

Jodie, however, is outraged by the fact that the new law does not criminalize those who ask others to create a deepfake, which is what Alex Woolf did. It is also not illegal to create a deepfake.

“This affects thousands of women and we need to have the right laws and tools to stop people from doing this,” she concludes.

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.