Hospital Sírio-Libanês, in São Paulo, uses a system that prevents the recruiter from knowing whether the person applying for the vacancy is a man or a woman, white or black.

Businesses are increasingly using artificial intelligence, such as applicant tracking systems (ATS), to screen resumes and hire professionals. It is a way to speed up the process, but robots, which are programmed by humans, can fail and reproduce prejudices of race, gender and other kinds.

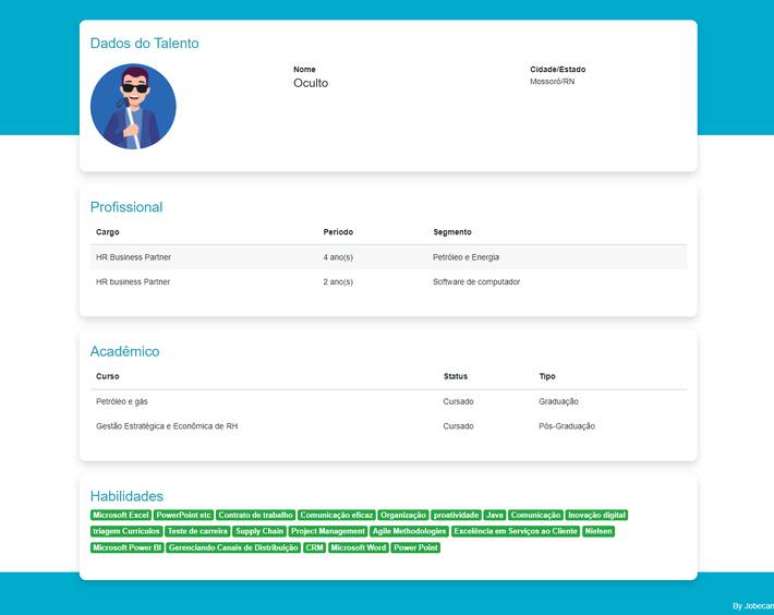

To avoid this, some systems already allow the whole process to be anonymous. Avatars are used to change the candidate’s image and voice in a virtual interview. With this refinement, neither the robot nor the human recruiter can tell whether the candidate is black or white, a woman or a man, or what their accent is, for example. Hospital Sírio-Libanês in São Paulo is already using the system in some departments.

“Unconscious bias is a mental trigger that leads people to commit prejudiced acts. When we say technology can be biased, it’s because we have humans feeding these machines,” says Cammila Yochabell, CEO and founder of Jobecam, a human resources platform that offers anonymous video and resume tools to companies like Sírio-Libanês.

Amazon’s algorithm preferred men

In 2018, Amazon faced an automated bias problem. The algorithm developed to select candidates was not neutral and gave priority to men. The algorithm gave low scores to resumes that contained the word “female”.

After the international fallout, the company admitted that the system incited gender discrimination.

Today there are still systems that look for professional profiles similar to those already present in a given company, which harms diversity.

“This is unconscious affinity bias. It’s one of the reasons there are so many straight white men in leadership positions,” says Yochabell.

How artificial intelligence avoids bias

The artificial intelligence that fights prejudice focuses on the candidate’s skills and competences, without analyzing aspects such as gender, race and ethnicity.

Anonymous virtual screening is offered in three modes:

- Anonymous CV: Applicants’ personal information is hidden. The analysis focuses on skills and experience.

- Pre-recorded anonymous interview: There is no interaction between recruiter and professional. The person’s identity is revealed only after approval for the next step.

- Live anonymous interview: Recruiters interact in real time with candidates. Used for group dynamics or with managers.

An important detail is that in all modes the face becomes an avatar, the person’s name is replaced with the name of a city or a country, and the voice is robotized (with up to four modulation options).
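As a rough illustration of the anonymous CV idea described above, the sketch below hides personal fields and swaps the name for a place-name alias. It is not Jobecam’s implementation: the field names, the alias list and the `anonymize` function are all assumptions made for this example, and the candidate record is invented.

```python
# Hypothetical alias pool: the article only says names become a city or a country.
ALIASES = ["Lisbon", "Kyoto", "Peru", "Nairobi"]

# Hypothetical names for the fields treated as personal information.
PERSONAL_FIELDS = {"name", "gender", "location", "photo"}


def anonymize(candidate: dict, index: int) -> dict:
    """Return a copy without personal fields, identified only by an alias."""
    visible = {k: v for k, v in candidate.items() if k not in PERSONAL_FIELDS}
    # Cycle through the alias pool so every candidate gets a place name.
    visible["alias"] = ALIASES[index % len(ALIASES)]
    return visible


# Invented record, used only to show the shape of the data.
candidate = {
    "name": "Maria Silva",
    "gender": "female",
    "location": "São Paulo",
    "photo": "maria.jpg",
    "skills": ["nursing", "triage"],
    "experience_years": 7,
}

anonymous_cv = anonymize(candidate, 0)
print(anonymous_cv)
```

The recruiter-facing record keeps only skills and experience, which is the information the article says the analysis should focus on.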

“We strip away candidates’ identifying characteristics to highlight their skills,” says the founder of Jobecam.

During the live interview, the company chooses whether to reveal the candidate’s face after 10 minutes of conversation or to keep the candidate anonymous.

Sírio-Libanês changes its model to increase diversity

Hospital Sírio-Libanês is one of the organizations adopting the model in some of its departments.

“This change comes with our agenda of offering a more diverse environment for the hospital sector, where there are a variety of criteria. We focus on bringing the right professional to the right position, regardless of gender, color, sexual orientation and religious belief,” says Pida Lamin, director of People and Culture at Sírio-Libanês.

From August 2022 to March this year, more than 90 professionals took part in this type of selection. Twenty-one were hired, in the following proportions: 90% are women, 51% are black, 30% are LGBTQIA+ and 10% are professionals over 50 years old.

The hospital says it did not have data on hiring rates prior to this system, because it did not use self-reporting of gender and race among applicants.

Recruitment in this format has been adopted in five departments of the institution (social engagement, marketing, technology and innovation, people management and population health).

Previously, Sírio-Libanês used the traditional model: after a candidate sent a resume, the recruiter called the professional, and the interviews followed. The manager always knew whether the candidate was a man or a woman and whether they had children.

“It was the initial model, with personal questions. Now, we’ve changed the process to focus on the technical skills to do the job well,” says Lamin.

Another change is in screening. In this phase the manager has access only to the candidate’s previous experience and to how it meets the requirements of the vacancy. Personal information such as name, gender, location and photograph is hidden.

What matters for AI-generated filters are technical and communicative skills, such as assertiveness, proactivity, and effective communication. A professional is eliminated in this phase only if they do not fit the technical profile of the position offered.

Bias arises in companies, not in artificial intelligence

Another company that uses artificial intelligence to broaden its selections with different candidates is Portão 3, a corporate expense management platform, whose HR department uses LinkedIn as its starting point. “We direct the filters so that there is no specific screening, for example, by gender, color and race,” says Marcello Amaro, CHRO (HR director) at Portão 3.

However, he adds that these choices are made by the recruiter, and the fields chosen at this stage can introduce unconscious bias into the AI during selection.

“It is much more important for HR to break down prejudices, teaching AI how to work, than for AI itself to be cast as the villain,” Amaro argues.

In a hypothetical selection without the automated system, Amaro would take five minutes to review a resume. With AI, the CHRO receives candidates whose experience already matches what the vacancy requires.

Experts say the responsibility lies not exactly with the artificial intelligence, but with whoever programs it. One solution is to identify people’s biases.

“Our role is to mitigate the unconscious biases that feed the machines, especially in talent selection,” says Cammila Yochabell.

She says the efficiency of AI needs to be balanced against what can become a bias in recruiting.

Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.
