Understand what Amdahl’s law is and how it is used to estimate performance scaling of code running on CPUs and other microprocessors

Postulated by computer architect Gene Amdahl in 1967, Amdahl’s Law is a mathematical analysis that describes how increasing the number of processors affects the speed at which code runs on a computer. In addition, the law helps determine the theoretical limit of performance gains according to the type of code executed on those processors.

Amdahl’s law is fundamental both for programming and for the design of new processors, because it lets you estimate how much adding more cores or threads will improve performance, depending on the application.

This matters because, in modern computing, one of the main sources of performance improvement is the parallel processing of code, similar to the pipeline used in RISC architectures.

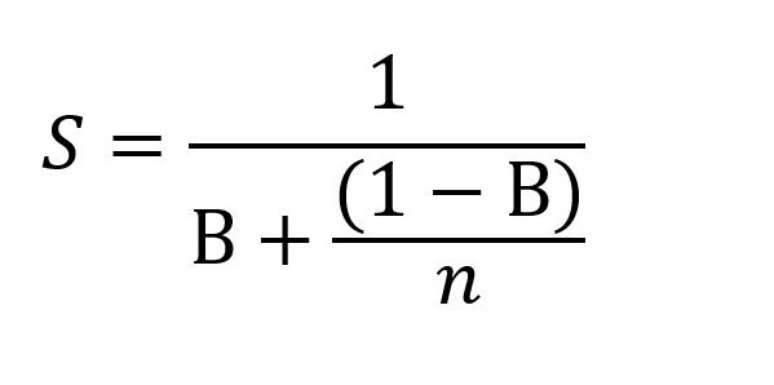

Mathematical description of Amdahl’s law

In simplified form, the formula of Amdahl’s Law relates the speedup, or increase in speed, to the increase in the number of processors, so that the gain is limited by the fraction of the execution time during which the additional processors are actually used.

The formula is:

$$S = \frac{1}{B + \frac{1 - B}{N}}$$

Where:

- S is the performance improvement (speedup)

- B is the strictly sequential fraction of the code

- N is the number of threads (or processors) being used
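As a minimal sketch, the formula S = 1 / (B + (1 - B)/N) can be written as a small Python function. The function names `amdahl_speedup` and `speedup_ceiling` are hypothetical, chosen only for illustration; the ceiling 1/B is the value S approaches as N grows without limit.

```python
def amdahl_speedup(b: float, n: int) -> float:
    """Theoretical speedup S for a strictly sequential fraction b on n processors."""
    if not 0.0 <= b <= 1.0:
        raise ValueError("b must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    # Sequential part runs as before; the parallel part (1 - b) is split across n processors.
    return 1.0 / (b + (1.0 - b) / n)


def speedup_ceiling(b: float) -> float:
    """Upper bound on S as n grows without limit: the parallel term vanishes, leaving 1 / b."""
    if not 0.0 < b <= 1.0:
        raise ValueError("b must be in (0, 1]")
    return 1.0 / b


if __name__ == "__main__":
    # 10% strictly sequential code, for a growing number of processors.
    for n in (1, 2, 4, 8, 16, 64, 1024):
        print(f"N={n:5d}  S={amdahl_speedup(0.1, n):.2f}")
    print(f"ceiling: {speedup_ceiling(0.1):.2f}")
```

With a single processor the function returns exactly 1 (no speedup), and no finite N ever reaches the ceiling.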

Gains limited by the potential for parallelism

All computer code contains, to some extent, instructions that require sequential processing, that is, instructions that cannot be broken down for parallel processing across many different threads. The higher the parallelizable fraction of the code (P = 1 - B), the greater the performance gain (S) as the number of processors increases.

In a theoretical example, even for a program in which only 10% (0.1) of the code is strictly sequential, the performance gain with infinitely many processors would be at most 10 times, given that:

$$S = \frac{1}{0.1 + \frac{1 - 0.1}{\infty}} = \frac{1}{0.1 + \frac{0.9}{\infty}}$$

Since any finite number divided by a quantity that grows without bound tends to zero, we have:

$$S = \frac{1}{0.1 + 0} = \frac{1}{0.1} = 10$$

In short, even in a highly parallelizable program like the one in the example above, adding more and more threads is of little use, as the performance gain is capped.
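To put numbers on these diminishing returns, the sketch below solves Amdahl's formula for the smallest N that reaches a chosen share of the 1/B ceiling. It uses Python's standard `fractions` module so the arithmetic is exact; the function names and the 50%/90%/99% targets are hypothetical, picked only for illustration.

```python
import math
from fractions import Fraction


def amdahl_speedup(b: Fraction, n: int) -> Fraction:
    """Exact Amdahl speedup S = 1 / (B + (1 - B) / N)."""
    return 1 / (b + (1 - b) / n)


def processors_needed(b: Fraction, target: Fraction) -> int:
    """Smallest N whose speedup reaches `target` times the ceiling 1/B.

    Solving 1 / (B + (1 - B)/N) >= target / B for N gives
    N >= (1 - B) * target / (B * (1 - target)).
    """
    if not (0 < b < 1 and 0 < target < 1):
        raise ValueError("b and target must both be strictly between 0 and 1")
    return math.ceil((1 - b) * target / (b * (1 - target)))


if __name__ == "__main__":
    b = Fraction("0.1")  # 10% strictly sequential, as in the example above
    for target in ("0.5", "0.9", "0.99"):
        t = Fraction(target)
        n = processors_needed(b, t)
        print(f"{float(t):.0%} of the 10x ceiling needs N = {n} "
              f"(S = {float(amdahl_speedup(b, n)):.2f})")
```

For B = 0.1, reaching half of the ceiling (S = 5) takes 9 processors, 90% (S = 9) takes 81, and 99% (S = 9.9) takes 891: each small step toward the limit costs an order of magnitude more hardware.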

Applications of Amdahl’s law in projects

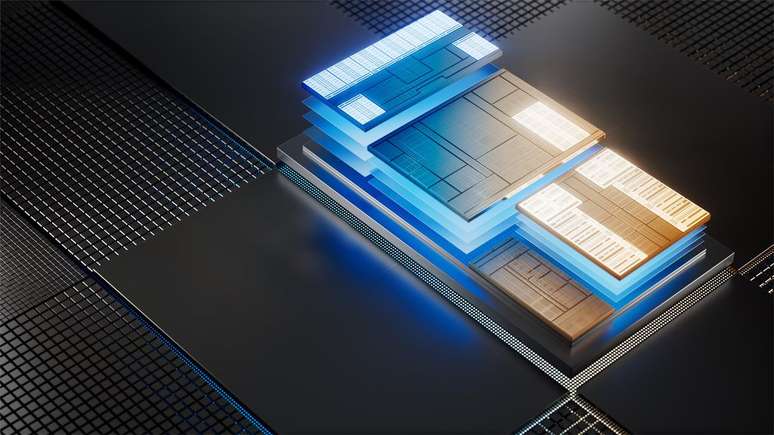

Of course, not all programs have such a high degree of parallelism, which means that, for some specific tasks, it is not even worth investing much in multithreading. In fact, manufacturers rely on Amdahl’s law to define the proportion of E-cores (efficiency cores, without Hyper-Threading) and P-cores (performance cores) on Intel chips from Alder Lake onwards, for example.

Among the main practical applications of Amdahl’s Law are:

- Performance evaluation: Amdahl’s law can be used to evaluate the performance of a system. Taking into account a program’s execution time and its degree of parallelism, Amdahl’s Law lets us calculate the maximum speedup.

- Design decisions: When designing new systems, Amdahl’s Law helps you make informed decisions about where to invest resources. If only a small part of the code to be executed can be parallelized, it may be more cost-effective to invest in systems with higher clock frequencies than in solutions with a large number of cores.

- Capacity planning: In a cloud computing environment, Amdahl’s law helps with capacity planning. When sizing database servers, for example, it makes it possible to determine the ideal number of instances a service needs, depending on the specific application.

- Code optimization (parallelization): In software development, Amdahl’s law helps identify which parts of the code would benefit most from optimization. If a part of the code that consumes a lot of execution time cannot be broken down into parallelizable instructions, it may be more advantageous to focus optimization efforts elsewhere, making better use of the resources of the platform that will run the code.
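The design-decision trade-off above can be sketched by comparing two hypothetical chips against one baseline core: one with 8 cores at the baseline clock, and one with 4 cores clocked 25% higher. Both configurations and the `option_speedup`/`better_option` names are invented for illustration, and the model assumes, optimistically, that a higher clock speeds up all code by the same factor.

```python
def amdahl_speedup(b: float, n: int) -> float:
    """Amdahl speedup S = 1 / (B + (1 - B) / N) relative to one baseline core."""
    return 1.0 / (b + (1.0 - b) / n)


def option_speedup(b: float, cores: int, clock_ratio: float) -> float:
    """Speedup of a hypothetical chip versus one baseline core.

    Assumption: raising the clock by `clock_ratio` speeds up *all* code
    (sequential and parallel) by that same factor. Real chips rarely scale
    this cleanly; memory latency, for instance, does not shrink with clock.
    """
    return clock_ratio * amdahl_speedup(b, cores)


# Hypothetical design options; the numbers are illustrative only.
OPTIONS = {
    "many_cores": {"cores": 8, "clock_ratio": 1.0},
    "fast_cores": {"cores": 4, "clock_ratio": 1.25},
}


def better_option(b: float) -> str:
    """Return the name of the option with the higher estimated speedup."""
    return max(OPTIONS, key=lambda name: option_speedup(b, **OPTIONS[name]))


if __name__ == "__main__":
    for b in (0.05, 0.4):
        scores = {name: round(option_speedup(b, **cfg), 2) for name, cfg in OPTIONS.items()}
        print(f"B={b}: {scores} -> {better_option(b)}")
```

Under these assumptions, code that is 40% sequential favors the faster 4-core chip (about 2.27x versus 2.11x), while code that is only 5% sequential favors the 8-core chip (about 5.93x versus 4.35x).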

Challenges of code parallelization

Since most modern processors already have multiple physical cores, some of which support multithreading, code optimization is essential to maximize performance. However, this optimization depends on several factors that can pose challenges.

- Identifying parallelism: As already mentioned, all code inevitably has parts that require sequential execution. Identifying what fraction of the code can be parallelized is the first step in determining how well suited an application is to modern systems, and how far it can scale even after optimization.

- Synchronization: Even parts of a program that can run in parallel may operate on one or more shared variables. It is therefore necessary to synchronize the execution of these interdependent parts, ensuring that those operations complete without inconsistencies.

- Interprocess communication: For the same reason, different parts of the program may need to communicate with each other, either because they act on the same variables or because they are interdependent processes. This communication can also reduce overall performance, especially in distributed systems such as cloud server clusters, where communication between cores in different machines is slower and varies depending on the interconnect.

- Load balancing: In a parallel computing environment, overall performance will inevitably suffer if one core is overloaded while the others are idle. Optimizing the code to balance the workload across each core/thread is therefore extremely important to ensure optimal performance.
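The synchronization problem described above can be illustrated with Python's standard `threading` module: several threads increment one shared counter, and a `Lock` ensures the read-modify-write never interleaves. The `SharedCounter` class and `run` helper are hypothetical names for this sketch. Because the locked section runs one thread at a time, it behaves like part of the sequential fraction B in Amdahl's formula. Note that in the standard CPython build the global interpreter lock also limits CPU-bound parallelism, so this example shows correctness, not speedup.

```python
import threading


class SharedCounter:
    """A counter shared by several threads, protected by a lock."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Critical section: the lock lets only one thread execute this
        # read-modify-write at a time, so this part runs sequentially.
        with self._lock:
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def run(num_threads: int = 4, increments_per_thread: int = 10_000) -> int:
    """Start the threads, wait for all of them, and return the final count."""
    counter = SharedCounter()

    def worker() -> None:
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # synchronization point: wait until every thread finishes
    return counter.value


if __name__ == "__main__":
    print(run())
```

The final count always equals `num_threads * increments_per_thread`; the more time threads spend waiting on such a lock, the larger the effective sequential fraction and the lower the ceiling on speedup.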

Regardless of the challenges of parallelization, code optimization is essential to the advancement of modern computing, as it enables dramatic improvements in performance.

Therefore, over almost 60 years, Amdahl’s Law has been one of the factors that, together with other key elements, has enabled progress in hardware and software architecture, as it helps not only to scale systems but also to evaluate the performance potential of future and already installed systems.


Source: Terra

Rose James is a Gossipify movie and series reviewer known for her in-depth analysis and unique perspective on the latest releases. With a background in film studies, she provides engaging and informative reviews, and keeps readers up to date with industry trends and emerging talents.